Whether you are a content creator, event manager, meeting organiser, or busy professional, adding subtitles or closed captions to your live content and videos is a game-changer.

A recent study by UK charity Stagetext reports that four out of five young people use subtitles when they watch TV. The charity's research further suggested an average of 31% of people would go to more live events if more had captions on a screen in the venue.

Subtitling and captioning have begun to shape a new norm for how people consume videos and live content. But once words start appearing on screen, many people use the terms captions and subtitles interchangeably.

Let's look at subtitles and captions in depth: what they are, how they differ, and where each is best used.

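In this article:

1. Captioning

1.1. What captioning is

1.2. The benefits of captions

1.3. Where captions can be used

1.4. How people access captions

1.5. The two types of captions

1.6. How captions are generated

2. Subtitles

2.1. What subtitles are

2.2. The different types of subtitles

2.3. The benefits of subtitles

2.4. Where subtitles can be used

2.5. How subtitles are generated

3. The differences between captions and subtitles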

Captioning

What is captioning?

Captioning is the process of transcribing the audio content of a television broadcast, film, video, live event, or other production into text and displaying that text on a screen, monitor, or other visual display.

What are the benefits of captions?

Essentially, captions benefit everyone. As mentioned earlier, the vast majority of younger people use them for one reason or another. Captions give viewers visual reinforcement for following the audio. They are especially useful for people with hearing impairments, and they are also popular with people watching content in a noisy environment.

More than 100 empirical studies document that captioning a video improves comprehension of, attention to, and memory of the video.

In a nutshell, captions can help:

- Improve comprehension

- Support the deaf and hearing-impaired

- Support people who don't understand the original audio language

- Provide more options for media consumption

Where can captions be used?

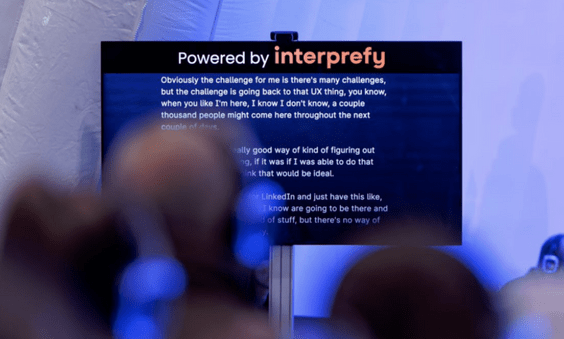

Captions are increasingly popular for live events, such as webinars, presentations, or conferences, providing a visual aid to follow the speech.

Some attendees might have difficulty hearing, so captions let them read what is being said. Others might prefer reading over listening because they are in a noisy environment, such as a café or public transport.

Captions make it easier to catch and keep your audience’s attention. It’s much easier to follow what’s going on (and validate what’s being said) if there are captions.

How do people access captions?

The captions are synchronized with the audio so that they appear as the audio is delivered. Captions can be created offline, when they are produced and added after a video segment has been recorded but before it is aired or played, or online, when they are created in real time as the content originates.
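To make the idea of synchronization concrete, here is a minimal Python sketch of how a player might pick the caption to show at a given playback time. The `Cue` class and the `active_cue` function are hypothetical names chosen for illustration, and the sketch assumes cues are sorted by start time and never overlap. Real players are more involved.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass
class Cue:
    start: float  # seconds from the start of the audio
    end: float    # seconds from the start of the audio
    text: str


def active_cue(cues, playback_time):
    """Return the cue that should be on screen at playback_time, or None.

    Assumes cues are sorted by start time and do not overlap.
    """
    starts = [cue.start for cue in cues]
    # Find the last cue that starts at or before the playback time.
    index = bisect_right(starts, playback_time) - 1
    if index < 0:
        return None
    cue = cues[index]
    # The cue is only visible until its end time.
    return cue if playback_time < cue.end else None


cues = [
    Cue(0.0, 2.5, "Welcome, everyone."),
    Cue(3.0, 6.0, "Let's get started."),
]
```

Calling `active_cue(cues, 1.0)` returns the first cue, while a time that falls in the gap between the two cues, such as 2.7 seconds, returns `None`, so nothing is displayed.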

What are the two types of captions?

- Closed Captions (CC): Viewers can turn these captions on when they need them and off when they don't.

- Open Captions: These are part of the video itself and cannot be turned off.

How are captions generated?

Captions can be generated in several ways. They can also cater to multilingual audiences by making the speech available as live text in languages other than the speaker's:

- Automated Speech Recognition (ASR) technology: ASR, a form of Artificial Intelligence (AI), automatically transcribes speech into written text that appears in or next to the video in real time. In events with simultaneous interpretation, this works both for the audio of the speaker and for the audio of the interpreters who interpret the speech in real time.

- Human captioning: Captioners, often also referred to as captionists or stenographers, transcribe spoken words into text by listening to the speech and typing simultaneously.

- Machine-translated ASR captions: As with ASR captioning, AI technology transcribes the speech live, but the text is then rendered into another written language through machine translation.
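The difference between plain ASR captions and machine-translated ASR captions can be sketched as a small pipeline in Python. Everything here is an assumption made for illustration: `caption_stream`, `fake_transcribe`, and `fake_translate` are hypothetical names, and the two stand-in functions simply imitate what a real speech recognition or translation service would return.

```python
def caption_stream(audio_chunks, transcribe, translate=None):
    """Yield one caption line per audio chunk.

    transcribe: callable that turns an audio chunk into text (the ASR step).
    translate:  optional callable that turns text into another language
                (the machine translation step).
    """
    for chunk in audio_chunks:
        text = transcribe(chunk)
        if not text:
            continue  # nothing was said in this chunk
        yield translate(text) if translate else text


# Stand-ins for real services, for illustration only.
def fake_transcribe(chunk):
    return chunk["spoken"]


def fake_translate(text):
    return {"Good morning": "Guten Morgen"}.get(text, text)


chunks = [{"spoken": "Good morning"}, {"spoken": ""}]
same_language = list(caption_stream(chunks, fake_transcribe))
translated = list(caption_stream(chunks, fake_transcribe, fake_translate))
```

Leaving out `translate` gives captions in the speaker's language, while passing it adds the translation step on top of the same transcription.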

Subtitles

What are subtitles?

If you are a foreign movie aficionado, odds are you're a regular user of subtitles.

Subtitles translate a video dialogue into other languages so that audiences all over the world can watch videos and movies even when they don't understand the language spoken. Subtitles are text derived from a transcript of the dialogue in films, TV programs, or video games.

Subtitles are usually displayed either at the bottom of the screen or at the top of the screen if there is already text at the bottom of the screen.

What are the different types of subtitles?

- Standard subtitles: Standard subtitles are designed for viewers who can hear the audio but cannot understand the language. They can be either open, meaning they are embedded in the video file, or closed, meaning viewers can turn them on or off as they wish.

- Subtitles for the Deaf and Hard of Hearing (SDH): SDH are designed specifically to support hearing-impaired viewers. They contain not only the spoken dialogue but also information about background sounds and speaker changes, along with a translation of the script.

What are the benefits of using subtitles?

Like captions, subtitles in videos are great for a number of reasons:

- Accessibility - Subtitles make videos widely accessible, both for deaf and hard-of-hearing viewers and for people who don't understand the spoken language.

- SEO - When subtitles are attached to your video as a file (e.g. in SRT or VTT format), search engines such as Google can read them, which can increase the chances of your content ranking higher.

- Engagement - A recent study suggests that 85% of videos on Facebook are watched on mute. Subtitles let those viewers follow along, and viewers are more inclined to watch your videos if they provide a great user experience.

- International reach - Subtitles make video content available to people who would otherwise not understand it.
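For readers curious about what the SRT and VTT files mentioned above look like, here is a minimal Python sketch that turns a list of timed lines into both formats. The function names `format_timestamp`, `to_srt`, and `to_vtt` are hypothetical, and the sketch covers only the basic layout of each format, not styling or positioning options.

```python
def format_timestamp(seconds, separator):
    """Format seconds as HH:MM:SS<separator>mmm."""
    total_ms = round(seconds * 1000)
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, millis = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}{separator}{millis:03d}"


def to_srt(cues):
    """Build SRT text: numbered blocks, comma before the milliseconds."""
    blocks = []
    for number, (start, end, text) in enumerate(cues, start=1):
        timing = f"{format_timestamp(start, ',')} --> {format_timestamp(end, ',')}"
        blocks.append(f"{number}\n{timing}\n{text}\n")
    return "\n".join(blocks)


def to_vtt(cues):
    """Build WebVTT text: WEBVTT header, dot before the milliseconds."""
    blocks = ["WEBVTT\n"]
    for start, end, text in cues:
        timing = f"{format_timestamp(start, '.')} --> {format_timestamp(end, '.')}"
        blocks.append(f"{timing}\n{text}\n")
    return "\n".join(blocks)


# Each cue is (start in seconds, end in seconds, text).
cues = [
    (0.0, 2.5, "Welcome, everyone."),
    (3.0, 6.0, "Let's get started."),
]
srt_text = to_srt(cues)
vtt_text = to_vtt(cues)
```

Both formats are plain text, which is part of why they are easy for video platforms and search engines to read.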

Where can subtitles be used?

Because subtitles are designed for people who can hear the audio but don't understand the language, they are mainly used in movies and TV series, for viewers who like watching content in its original version. They are often produced and synchronized before the video is released, and most on-demand video streaming platforms include them.

How are subtitles generated?

Often, subtitles are translated by professional translators before they are added to videos, movies, and the like. Advancements in technology have also made it possible to generate subtitles automatically in real time.

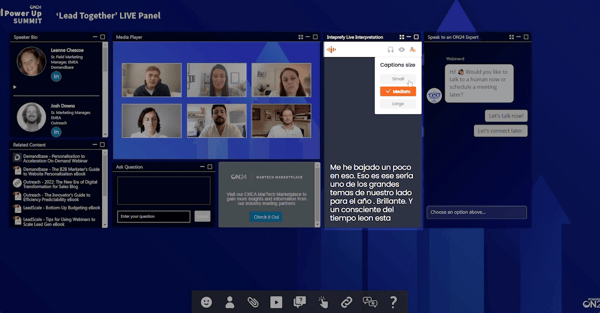

For live events, webinars, business meetings, or conferences, subtitles can be produced automatically by utilizing a combination of Automated Speech Recognition and Machine Translation technology.

Captions vs subtitles - what is the difference?

Contrary to popular belief, the two are not synonymous. While captions are designed to support the hard of hearing, subtitles are translations for people who don’t speak the language of the content. Subtitles are often used for movies and TV shows and are typically developed before the release of a film or show.

Captions and subtitles - blurring the lines

We've established that the key difference between captions and subtitles lies in their distinct purposes. But with advances in technology that add live translation to captioning capabilities, the lines between captions and subtitles are becoming increasingly blurred.

Bridging the language gap with the right process and technology

Simultaneous interpretation (on-site or remote), translation, captioning, and subtitles all fulfill the purpose of reducing communication barriers. Deciding which one to use depends mainly on your type of content and user needs.

When it comes to deciding on the technology, setting and budget also play a key role alongside user needs. There are services that event managers can use to get access to interpreters and human-interpreted live captions in virtual and hybrid setups. Platforms such as Interprefy enable real-time simultaneous interpretation and live captioning for any type of event or meeting, empowering event managers to deliver content in a variety of languages.
