Zoom and Teams both have an appealing feature that allows meeting attendees to activate closed captioning. This tool provides users with valuable visual assistance for following the sessions by automatically creating a live transcription of what is being said.

Both Teams and Zoom use automated speech recognition algorithms to transcribe speech in real time. The process is fully automated, with little to no preparation required from the meeting host.

How accurate are Zoom captions?

Zoom offers two ways to add closed captioning to your Zoom meetings and webinars. In meetings, the host can assign manual captioning to a meeting guest using an integrated third-party closed captioning provider. Zoom also has an automated captioning capability that can be turned on and off without any additional work from the meeting host.

Zoom's automatic closed captioning is estimated to deliver around 80% accuracy.

How accurate are Teams captions?

Users can enable live captions during Microsoft Teams meetings, which display immediately beneath the video feed. Research suggests these captions can achieve an accuracy of 85%-90%.
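
The article does not say how the accuracy figures quoted above were measured. A common convention for speech-to-text, assumed here, is word accuracy: one minus the word error rate (WER), where WER counts the word substitutions, insertions, and deletions needed to turn the caption into the reference script. The sketch below shows that calculation; the function name and the sample sentences are made up for illustration.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # Classic Levenshtein distance, computed one row at a time.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from caption
            insertion = curr[j - 1] + 1  # extra word in caption
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


# Hypothetical example: 10 reference words, 2 of them captioned wrongly.
reference = "please join us for the product launch at nine tomorrow"
hypothesis = "please join us for a product lunch at nine tomorrow"

wer = word_error_rate(reference, hypothesis)
print(f"WER: {wer:.0%}, word accuracy: {1 - wer:.0%}")
# prints "WER: 20%, word accuracy: 80%"
```

Under this convention, 80% accuracy means roughly one word in five is wrong, which says nothing about which words those are. A caption can score well overall and still miss every name that matters.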

Where standard speech-to-text engines fail

Both platforms offer captioning that is good enough to convey the general meaning of what is being said. However, most automated speech recognition systems struggle when speakers use uncommon words or phrases, such as distinctive brand names or less common personal names with alternate spellings.

AI-powered speech-to-text engines are predictive by nature. If a term is not in a standard dictionary and is rarely or never used in everyday conversation, the engine will not anticipate it in your sessions.


How engine optimisation can increase the quality

More advanced AI-powered captioning systems, such as Interprefy Captions, can be tailored to include significant and uncommon words and phrases that normal engines would overlook.

This is accomplished by customising the system to include keywords that are important to your session.

By entering these terms into the system beforehand, the system will be aware of their existence, able to detect them, and correctly transcribe them when they arise during a session.

The following are examples of terms that speech-to-text systems frequently miss:

- Names of people, the speakers, key people in the organisation, or the field

- Names of technologies, products, or services

- Brand names

- Acronyms and abbreviations

- Uncommon terms like technical expressions, specialist terms, or jargon
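
To make the idea concrete, here is a toy sketch of why a predictive engine prefers a familiar word over an unfamiliar one, and how registering a term in advance can flip that choice. It is not a description of how Zoom, Teams, or Interprefy work internally. The scoring scheme, the `boost` parameter, and every number are assumptions invented for illustration.

```python
from typing import AbstractSet, Mapping

# Assumed floor score for words the model has never seen.
UNKNOWN_WORD_LOG_PRIOR = -12.0


def pick_transcription(
    acoustic_scores: Mapping[str, float],
    word_log_prior: Mapping[str, float],
    custom_terms: AbstractSet[str] = frozenset(),
    boost: float = 8.0,
) -> str:
    """Return the candidate word with the highest combined log score."""

    def combined(word: str) -> float:
        # How likely the engine thinks the word is before hearing any audio.
        prior = word_log_prior.get(word, UNKNOWN_WORD_LOG_PRIOR)
        if word in custom_terms:
            prior += boost  # terms entered beforehand become plausible
        # Add how well the word matches the audio.
        return acoustic_scores[word] + prior

    return max(acoustic_scores, key=combined)


# Toy numbers: "bravocado" matches the audio slightly better,
# but only "bravado" is a word the engine already knows.
acoustic = {"bravado": -2.0, "bravocado": -1.5}
prior = {"bravado": -6.0}

print(pick_transcription(acoustic, prior))
# prints "bravado"
print(pick_transcription(acoustic, prior, custom_terms={"bravocado"}))
# prints "bravocado"
```

Without the custom term, the unknown word is penalised so heavily that the closest dictionary word wins even though it fits the audio less well. Once the term is registered, the same audio produces the correct spelling.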

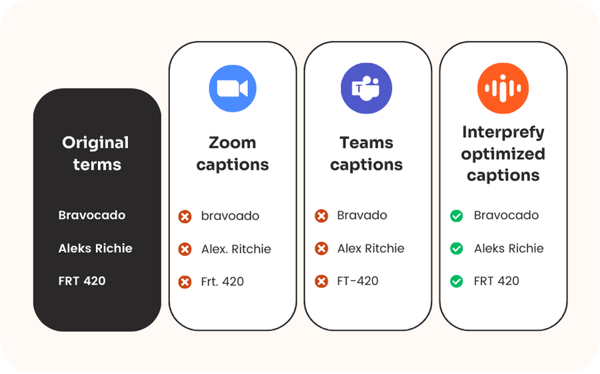

Captions quality comparison

Let's test the engines right away. Using the automatic captions for the same statement in Teams, Zoom, and Interprefy, we compare the three methods side by side.

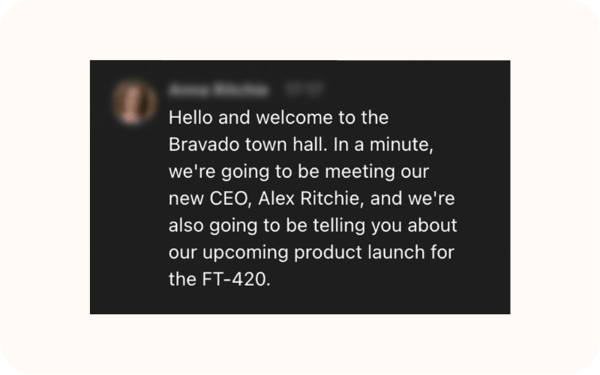

Imagine a company named "Bravocado." Bravocado intends to hold a company-wide town hall meeting to introduce its new CEO, Aleks Richie, and to launch its latest product, the FRT 420.

What follows are the transcriptions of the opening remarks in MS Teams, Zoom, and Interprefy.

| Original script |
| --- |
| Hello and welcome to the Bravocado Town Hall. In a minute, we’re going to be meeting our new CEO, Aleks Richie, and we're also going to be telling you about our upcoming product launch for the FRT 420. |

The following are significant terms that are relevant to the event but are not typically identified by AI engines:

- Bravocado

- Aleks Richie

- FRT 420

Let's now examine the captions that are displayed on each platform during a meeting with the exact same spoken sentences.

Microsoft Teams captions output

Microsoft Teams will be our first stop. We spoke the exact words above clearly in a Microsoft Teams meeting with the automatic captioning option turned on.

This is the result:

As we can see, Microsoft's engine was accurate enough to convey the gist, but it missed all three key terms.

| Original term | | Teams output |
| --- | --- | --- |
| Bravocado | → | Bravado |
| Aleks Richie | → | Alex Ritchie |
| FRT 420 | → | FT-420 |

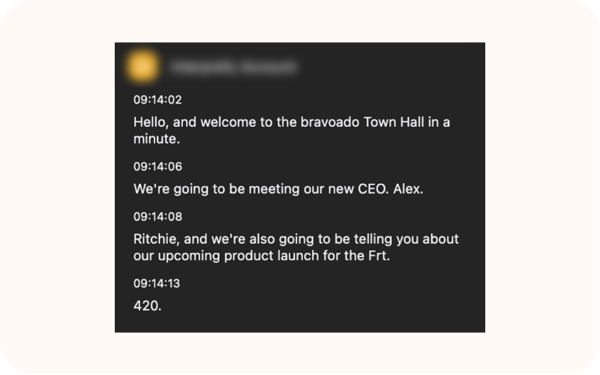

Zoom captions output

Let's now explore Zoom's captioning function. We followed the same procedure, joining a Zoom meeting, turning on Zoom captions, and speaking the words clearly and aloud.

This is the result:

Zoom's results differed slightly from Teams'. The punctuation and sentence structure are slightly off, and Zoom also misidentified all three key terms.

| Original term | | Zoom output |
| --- | --- | --- |
| Bravocado | → | bravoado |
| Aleks Richie | → | Alex. Ritchie |
| FRT 420 | → | Frt. 420 |

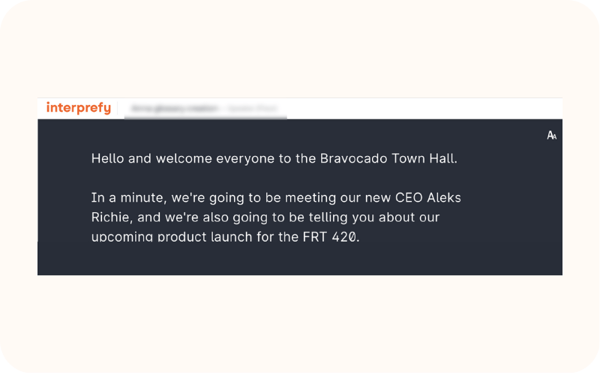

Interprefy captions output with engine optimisation

While Zoom and Teams deliver raw output based on their standard training data, Interprefy goes a step further by optimising the speech-to-text engine. This is achieved by supplying the system in advance with key terms that are unique and highly relevant to your session.

This was the result:

As we can see, Interprefy's captioning system captured all three key terms accurately once the engine had been optimised.

In summary

Standard AI engines can provide captions that are useful for gaining a general sense of what is being said. However, our experiment showed that the two engines that had not been primed with event-specific terminology, Zoom and Teams, each missed all three key terms we were looking for.

If "good enough" suffices, Zoom and Teams captions may be a viable way to help users gain a basic understanding. However, depending on the context and importance of your event, adopting a system that will almost certainly misspell crucial terms may be a risky choice.

Specialised captioning systems like Interprefy Captions can help you to improve accuracy beyond the standard. And the best bit is that they can be added to your Zoom webinars, Teams meetings, or any other meeting platform you're using, so you can take your captioning experience to the next level anywhere.
